“To study persons is to study beings who only exist in, or are partly constituted by, a certain language.”

-- Charles Taylor, Sources of the Self

AIs based on large language models (LLMs) are the epitome of what can be constituted by language alone. They can easily take isolated linguistic philosophy to its absurd extreme.

Unfortunately, this means there is a disconnect between statements that are correct within the context of the LLM itself and the statements we want: those that appropriately address a scientific or clinical context in the world outside the LLM. This disconnect produces AI dialog replies that currently make medical advisory AI impossible to trust.

Current LLM-based AIs are masters of what Harry G. Frankfurt called “bullshit.” Until an AI can distinguish the fictional or obsolete diagnoses and treatments within its model from those that help the patient, and eliminate the former, it cannot be trusted with any unsupervised role in patient care.

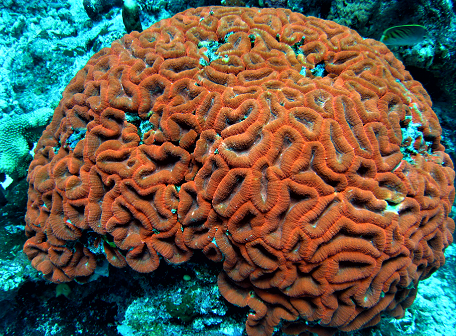

TROPICAL SYNAPSES

Reflections on topics including clinical neurology, recent publications in neuroscience, philosophy of biology, "neuro-doubt" about modern media hype of new neuro-scientific procedures and methods, consciousness, scuba diving, horticulture, jazz, blues, slack key guitar music, the Hawai'i health scene, and whatever else dat's da kine...
